Does FPGA use define verification and debug?

You may be aware that we ran a first survey on FPGA design, debug and verification over the past month.

(By the way, many thanks to our respondents – we’ll announce the Amazon Gift Card winner in September).

In this survey, we did some ‘population segmentation’, and first asked about the primary reason for using FPGAs:

– Do you use FPGAs as a target technology? In other words, are FPGAs part of your final product’s BOM (bill of materials)? … – or:

– Do you use FPGAs as a prototyping technology for an ASIC or a SoC… ?

49 respondents fell into the first group (which we’ll also call the ‘pure FPGA’ users) and 34 into the second group. The survey was online for about one month and was advertised on social networks. The questions were in English.

Behind this segmentation question is of course the idea that the flow used to design an FPGA is usually significantly different from (and shorter than) the one used to design an ASIC.

(This is claimed by FPGA vendors as one of the significant differences between ASIC and FPGA – you can see an example of this comparison here).

Since we care (a lot) about FPGA debug and verification, one of the survey questions was about the set of tools currently used for FPGA debug and verification.

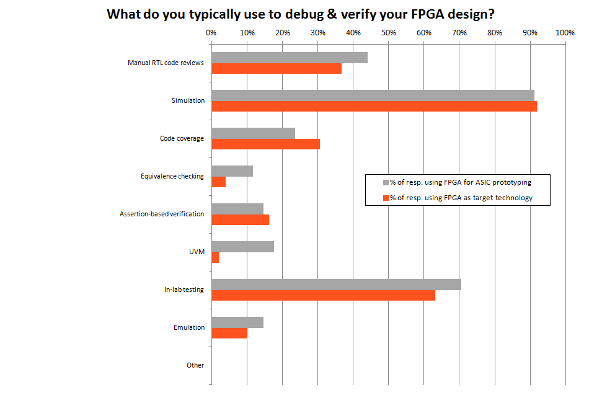

The chart above shows the percentage of respondents in each group who selected each proposed tool or methodology. Respondents were invited to select all the choices that applied to them.
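For readers who want to build the same kind of chart from their own multi-select survey, here is a minimal Python sketch of the tally. The responses, the group labels and the function name `selection_rates` are invented for illustration; they are not our survey data or our actual tooling.

```python
from collections import Counter, defaultdict

# Made-up answers for illustration only: (group, set of selected techniques).
# These are NOT the actual survey responses.
responses = [
    ("target", {"simulation", "in-lab testing"}),
    ("target", {"simulation", "code review"}),
    ("prototyping", {"simulation", "in-lab testing", "UVM"}),
    ("prototyping", {"in-lab testing"}),
]

def selection_rates(responses):
    """Return {group: {technique: percent of that group's respondents who selected it}}."""
    group_sizes = Counter(group for group, _ in responses)
    counts = defaultdict(Counter)
    for group, selected in responses:
        counts[group].update(selected)  # one count per technique per respondent
    return {
        group: {tech: 100.0 * n / group_sizes[group] for tech, n in techs.items()}
        for group, techs in counts.items()
    }

rates = selection_rates(responses)
```

Because each respondent may select several techniques, the percentages within a group are not expected to add up to 100.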

A podium shared by simulation, in-lab testing and RTL code reviews

With no significant difference between the two groups, simulation is the clear winner, used by a little more than 90% of respondents. Frankly, we wonder how it is still possible not to simulate an FPGA design today – we have not analyzed the cases in which designers are able to skip this step.

In-lab testing comes second, with close to 70% usage among the respondents. In the survey, in-lab testing actually covers two categories of tools:

– traditional instruments, such as scopes and logic analyzers, and

– embedded logic analyzers, such as Xilinx ChipScope, Altera Signal Tap and the like.

Manual RTL code review completes the podium. We would expect every designer to review his/her own code, so why is it not 100%? Probably because the people verifying and debugging the FPGA are not always the RTL coders. Another possibility is that many do not see ‘reviewing the code’ as a ‘verification technique’ in itself, but as something that results from the verification and debug process.

The case of code coverage

While this technique has been around for quite some time now (and is actually complementary to simulation), it is interesting to see that code coverage is equally popular in our two groups (we do not think that the difference between the groups is significant here).

It has been widely said that ‘pure FPGA designers’ are careless and do not use the same advanced and rigorous techniques as ASIC designers. Well, apparently, they do not just care about simulating a few cases; they show the same level of concern for properly covering the code as ASIC designers.

Assertions: YES

UVM: not for ‘pure’ FPGA designers

UVM is quite a hot topic today, with most of the major EDA companies actively promoting this methodology. For those unfamiliar with the subject, here is a brief overview. It currently seems to be on a clear upward trend for ASIC and SoC design, and tools and environments that can also be applied to FPGA design are appearing on the market. As we can see on the chart, UVM has not really gained traction among our ‘pure FPGA’ respondents, although close to one in five of our ‘ASIC engineers’ has adopted this technique.

Takeaways

1) In-lab testing is a well-entrenched habit of the FPGA designer, and there seems to be no notable difference between our groups. As we pointed out in a previous publication, in-lab FPGA test and debug is here to stay. We simply believe that – for FPGA at least – this technique must and can be improved.

2) The FPGA engineer seems to be using a mix of methodologies when it comes to debug and verification, but not necessarily a lot of them.

Among our respondents, the average number of techniques used for debug and verification is 2.58 (2.39 for the ‘pure FPGA players’ against 2.68 for the ASIC designers).

And – please note: simulation is there of course, often complemented by code coverage.

‘The FPGA engineer who does not simulate at all’ appears to be just a myth today…
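As a side note on how such an average is obtained: count the techniques each respondent ticked, then take the mean over all respondents or over one group. The sketch below uses invented counts and a hypothetical helper `mean_techniques`; it does not reproduce our survey figures.

```python
from statistics import mean

# Made-up data for illustration only: (group, number of techniques selected).
# These are NOT the actual survey answers.
answers = [
    ("target", 2),
    ("target", 3),
    ("prototyping", 3),
    ("prototyping", 2),
    ("prototyping", 4),
]

def mean_techniques(answers, group=None):
    """Mean number of techniques per respondent, optionally restricted to one group."""
    counts = [n for g, n in answers if group is None or g == group]
    return mean(counts)

overall = mean_techniques(answers)
target_only = mean_techniques(answers, "target")
prototyping_only = mean_techniques(answers, "prototyping")
```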

3) There is no notable difference between designers using FPGA as a target technology and those using FPGA as a prototyping technology, except for:

– Equivalence checking, and:

– UVM

… which seem to have a very limited adoption among the ‘pure FPGA players’.

We have shown here which techniques are currently used for debug and verification. In a later post, we’ll analyze the results of our survey further.

Of course, the million-dollar question is whether FPGA engineers are satisfied with this situation or whether they need improvements… Stay tuned.

Thank you for reading.

– Frederic