What do you use FPGA prototyping for? Typical uses of FPGA prototyping: FPGA prototyping is used for various purposes. Here are two of its main uses. FPGA debug: at some point in the FPGA design cycle, tests have to be run on a 'real' FPGA board to… Read more →

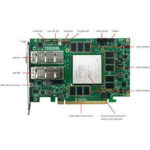

What are the key features of ideal ASIC prototypes? There has arguably never been a better time to prototype IP, ASICs or SoCs with FPGAs. With 35 to 40 billion transistors, the largest FPGA devices on the market can certainly hold a substantial share of… Read more →

Delivering High-Quality Semiconductor IP with Confidence. Because they are the essential building blocks of modern ASIC and SoC chips, semiconductor IPs are used in a wide variety of environments, where they stay in service for extended periods. Verifying that they run flawlessly in all these… Read more →

Is FPGA Prototyping really optional? Two weeks ago, we conducted a survey on LinkedIn about the usage of FPGA prototyping vs. emulation vs. simulation. By no means is this survey representative of the whole industry: the sample is simply too small and probably biased, as the… Read more →

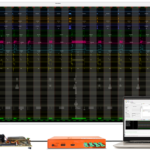

Upgrading FPGA Prototyping for High RTL Debug Productivity. The importance of FPGA prototyping: despite important advances in simulation-based validation and emulation, ASIC engineers worldwide continue to use FPGA prototyping systems. Earlier this year, we saw the launch of a new generation of such systems from multiple… Read more →

Choosing the ideal FPGA prototype for ASIC and SoC design - White Paper. In the 2020 edition of the Wilson Research Group Verification Survey, Mentor Graphics, a Siemens Business, shows that at least 30% of all respondents designing ASICs or SoCs report using FPGA prototyping, no matter… Read more →

The FPGA Prototyping problem we are trying to solve: 'À la Carte Menu' or 'Full Course Dinner'? Today, choosing an FPGA-based prototyping platform for ASIC or SoC design comes down to two choices: either you buy or build an FPGA board and choose EDA tools separately, or… Read more →

On-Demand Webinar: 'How to capture Gigabytes of traces from FPGA. At speed.' In this post, you have the opportunity to catch up on our webinar that ran live earlier this year. In this webinar, now available on demand, we introduce and demonstrate EXOSTIV and show how it can boost… Read more →

Does FPGA use define verification and debug? You may be aware that we ran a first survey on FPGA design, debug and verification last month. (By the way, many thanks to our respondents; we'll announce the Amazon Gift Card winner in September.) In… Read more →