You can't fix what you don't see

Whether you're designing a single IP, an FPGA-based product, a CPU coprocessor, an ASIC, or a System on Chip (SoC), your product must work as intended, ideally flawlessly, though "good enough" often applies. The process starts with a... Read more →

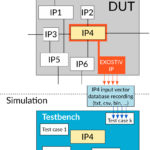

Exostiv boosts RTL simulation

It is essential to reduce the machine cycles wasted on simulation workloads.

Simulation dominates the ASIC/SoC/FPGA verification process

'The 2020 Wilson Research Group ASIC and FPGA Functional Verification Study' reports that an ASIC, SoC or FPGA designer can spend up to 40% of... Read more →

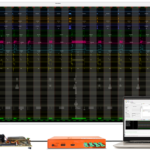

You can capture tons of data. Now what?

Offering huge new capabilities is not always seen positively. Sometimes, engineers come to us and ask: 'Now that I am able to collect gigabytes of trace data from an FPGA running at speed... how do I analyze that much data?' Read more →

10 cool things about us...

#1 Our waveform viewer commonly processes gigabytes of waves without lagging

That's because we have to display data recorded from an FPGA in operation over seconds, minutes or even hours, not just a window of waves from a simulation. So we needed to... Read more →

The FPGA Prototyping problem we are trying to solve

'A la Carte Menu' or 'Full Course Dinner'?

Today, choosing an FPGA-based prototyping platform for ASIC or SoC design reduces to 2 choices:

- Either you buy or build an FPGA board and choose EDA tools separately; or:

Read more →

On-Demand Webinar: 'How to capture Gigabytes of traces from FPGA. At speed.'

In this post, you have the opportunity to catch up with our webinar that ran live earlier this year. In this (now on-demand) webinar, we introduce and demonstrate EXOSTIV and show how it can boost... Read more →

‘My FPGA debug and verification flow should be improved…’

In my last post, I revealed some of the results of our recent survey on FPGA. These results depicted a ‘flow-conscious’ FPGA engineer, using a reduced mix of methodologies in the flow and very prone to going to... Read more →

Does FPGA use define verification and debug?

You may be aware that we have run a first survey on FPGA design, debug and verification during the last month. (By the way, many thanks to our respondents – we’ll announce the Amazon Gift Card winner in September). In... Read more →