What are the key features of ideal ASIC prototypes?

It seems that there has never been a better time to prototype IP, ASIC or SoC with FPGAs. With 35 to 40 billion transistors, the largest FPGA devices on the market can certainly hold quite a share of Read more →

Exostiv Blade - Managing multiple sites, targets & users

In this video, we demonstrate that Exostiv Blade lets you manage multiple sites, target boards and users to reach your FPGA debug, verification and test goals. In a previous demonstration, we already showed that Exostiv Blade core capabilities Read more →

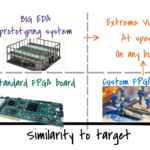

Upgrading FPGA Prototyping for High RTL Debug Productivity

The importance of FPGA prototyping

Despite important advances in simulation-based validation and emulation, ASIC engineers worldwide keep using FPGA prototyping systems. Earlier this year, we saw the launch of a new generation of such systems from multiple Read more →

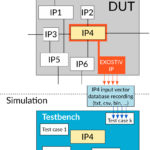

Exostiv boosts RTL simulation

It is essential to reduce the wasted machine cycles used for simulation workloads.

Simulation dominates ASIC/SoC/FPGA verification process

'The 2020 Wilson Research Group ASIC and FPGA Functional Verification Study' reports that an ASIC, SoC or FPGA designer can spend up to 40% of Read more →

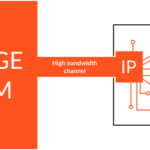

Record FPGA data during 1 hour - really.

As ASIC, SoC and FPGA engineers, we are used to watching the operation of our designs based on single limited snapshots. RTL simulations, for instance, provide bit-level details during execution times that span a few (milli)seconds at best. Read more →

Choosing the ideal FPGA prototype for ASIC and SoC design - White Paper.

In the 2020 edition of the Wilson Research Group Verification Survey, Mentor Graphics, a Siemens Business, shows that at least 30% of all respondents designing ASICs or SoCs report using FPGA prototyping, no matter Read more →

10 cool things about us...

#1 Our waveform viewer commonly processes gigabytes of waves without lagging

That's because we have to display data recorded from an FPGA in operation over seconds, minutes or even hours, not just a window of waves from a simulation. So we needed to Read more →

FPGA prototyping platform gets visibility with EXOSTIV

This month, thanks to AVNET Israel, we received the ONIX platform for interoperability tests. The board that we received was the AVT-ONIX-VU440-1, equipped with one Xilinx Virtex UltraScale XCVU440 device. The ONIX board system is designed by DgTronix in Israel Read more →

EXOSTIV lets you peer deeper into FPGA

EXOSTIV Introduction

EXOSTIV's structure (see below) allows deeper data capture from inside the FPGA: unlike JTAG instrumentation, EXOSTIV provides external storage that extends beyond the memory available in the FPGA. Coupled with the use of transceivers, it creates Read more →

Announcing… EXOSTIV for Intel FPGA

Using Intel FPGA? We have exciting news for you: EXOSTIV will soon support Intel FPGA! Please check the pictures above and below – this is EXOSTIV working with the ‘Attila’ dev kit of our partner, Reflex-CES, equipped with one Arria 10 GX Read more →

Does FPGA use define verification and debug?

You may be aware that we ran a first survey on FPGA design, debug and verification over the last month. (By the way, many thanks to our respondents – we’ll announce the Amazon Gift Card winner in September). In Read more →