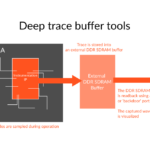

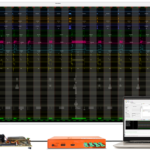

Announcing… EXOSTIV for Intel FPGA

Using Intel FPGA? We have exciting news for you: EXOSTIV will soon support Intel FPGA! Please check the pictures above and below – this is EXOSTIV working with the ‘Attila’ dev kit of our partner, Reflex-CES, equipped with one Arria 10 GX

Read more →

‘My FPGA debug and verification flow should be improved…’

In my last post, I revealed some of the results of our recent survey on FPGA. These results depicted a ‘flow-conscious’ FPGA engineer, using a reduced mix of methodologies in the flow and very prone to going to

Read more →

Does FPGA use define verification and debug?

You may be aware that we ran a first survey on FPGA design, debug and verification during the last month. (By the way, many thanks to our respondents – we’ll announce the Amazon Gift Card winner in September). In

Read more →